AdaBoost, Gradient Boosting and XGBoost. Both AdaBoost and Gradient Boosting build weak learners sequentially.

CatBoost vs. LightGBM vs. XGBoost (KDnuggets)

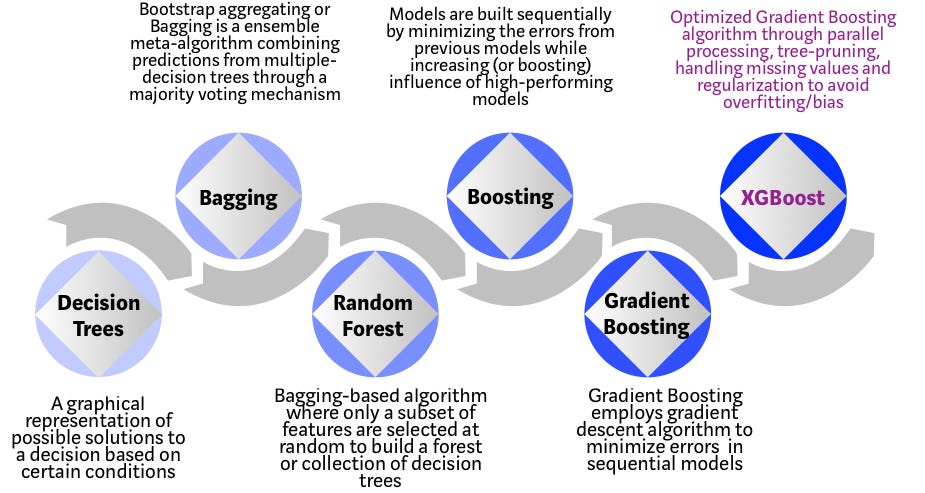

Implementations of this technique go by different names. Most commonly you will encounter Gradient Boosting Machines (GBM) and XGBoost.

In AdaBoost, shortcomings are identified by high-weight data points. It worked, but it wasn't that efficient. AdaBoost is the original boosting algorithm, developed by Freund and Schapire.

The base algorithm behind XGBoost is the Gradient Boosting Decision Tree (GBDT) algorithm. In AdaBoost, the final prediction is a weighted combination of all the weak learners, in which more accurate learners receive larger weights. So what are the differences between adaptive boosting and gradient boosting?
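
For binary classification, AdaBoost's weighted combination of weak learners can be sketched as a weighted vote: the sign of the alpha-weighted sum of their predictions. This is a minimal illustration, assuming labels in {-1, +1} and weak learners that are plain Python callables; the stumps and the `weights` values are hypothetical placeholders, not the output of a real training run.

```python
from typing import Callable, Sequence

# A weak learner maps a feature vector to a label in {-1, +1}.
WeakLearner = Callable[[Sequence[float]], int]


def adaboost_predict(x: Sequence[float],
                     learners: Sequence[WeakLearner],
                     alphas: Sequence[float]) -> int:
    """Return the sign of the alpha-weighted vote of the weak learners."""
    score = sum(alpha * h(x) for h, alpha in zip(learners, alphas))
    return 1 if score >= 0 else -1


# Hypothetical decision stumps, each thresholding the features.
stumps = [
    lambda x: 1 if x[0] > 0.5 else -1,
    lambda x: 1 if x[1] > 0.2 else -1,
    lambda x: 1 if x[0] + x[1] > 1.0 else -1,
]
# Hypothetical learner weights: the more accurate learner gets the larger alpha.
weights = [0.9, 0.4, 0.3]

print(adaboost_predict([0.7, 0.1], stumps, weights))  # prints 1
```

Here the first stump votes +1 and the other two vote -1, but its larger weight (0.9 against 0.4 + 0.3) carries the decision.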

XGBoost's training is very fast and can be parallelized or distributed across clusters. AdaBoost, Gradient Boosting and XGBoost are three algorithms that do not get much recognition. XGBoost versus Random Forest.

AdaBoost (Adaptive Boosting) works by concentrating on the samples that earlier learners got wrong. Gradient Boosting is also a boosting algorithm, so it too tries to create a strong learner from an ensemble of weak learners. Unlike AdaBoost, however, Gradient Boosting is a generic algorithm for finding approximate solutions to the additive modeling problem.

XGBoost is a decision-tree-based ensemble machine learning algorithm that uses a gradient boosting framework. Originally, AdaBoost was designed so that at every step the sample distribution was adapted to put more weight on misclassified samples and less weight on correctly classified samples. XGBoost is particularly popular because it has been the winning algorithm in a number of recent Kaggle competitions.
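
The reweighting step can be sketched for a single round as follows. This is a minimal sketch of the classic binary AdaBoost update, assuming labels in {-1, +1}; the function name `adaboost_round` is our own, and the small clamp on the error is an assumption added only to avoid `log(0)`.

```python
import math
from typing import List, Sequence, Tuple


def adaboost_round(weights: Sequence[float],
                   y_true: Sequence[int],
                   y_pred: Sequence[int]) -> Tuple[float, List[float]]:
    """One AdaBoost round for labels in {-1, +1}.

    Returns the learner weight alpha and the renormalised sample weights.
    """
    # Weighted error: share of the total weight sitting on misclassified samples.
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    err = min(max(err / sum(weights), 1e-12), 1 - 1e-12)  # avoid log(0)
    alpha = 0.5 * math.log((1 - err) / err)
    # Misclassified samples (t * p = -1) are scaled up by e^alpha,
    # correctly classified ones (t * p = +1) are scaled down by e^-alpha.
    new = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(new)
    return alpha, [w / total for w in new]


# Four equally weighted samples; the learner gets only the last one wrong.
alpha, w = adaboost_round([0.25] * 4, [1, 1, -1, -1], [1, 1, -1, 1])
print(round(alpha, 3), [round(x, 3) for x in w])  # prints 0.549 [0.167, 0.167, 0.167, 0.5]
```

The misclassified sample's weight rises from 0.25 to 0.5, so the next weak learner is pushed to get it right.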

XGBoost computes second-order gradients, i.e., second partial derivatives of the loss function. XGBoost is a more regularized form of Gradient Boosting.
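
To make "second-order gradients" concrete, here is a sketch for the binary logistic (log) loss, assuming labels in {0, 1} and one raw score (log-odds margin) per sample. These are the standard first and second derivatives of the log loss with respect to the margin; the function name is our own and this is not XGBoost's source code.

```python
import math
from typing import List, Sequence, Tuple


def logistic_grad_hess(margins: Sequence[float],
                       labels: Sequence[int]) -> Tuple[List[float], List[float]]:
    """First and second derivatives of the log loss w.r.t. the raw margin.

    labels are 0/1; margins are untransformed scores (log-odds).
    Not hardened against overflow for extreme margins.
    """
    grads, hessians = [], []
    for m, y in zip(margins, labels):
        p = 1.0 / (1.0 + math.exp(-m))   # predicted probability
        grads.append(p - y)              # first-order gradient
        hessians.append(p * (1.0 - p))   # second-order gradient (curvature)
    return grads, hessians


g, h = logistic_grad_hess([0.0, 2.0], [1, 0])
print(g, h)
```

The Hessian shrinks as the model becomes confident (p near 0 or 1); that curvature information is what a purely first-order method does not use.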

AdaBoost was the first boosting algorithm to be designed, and it works with one particular loss function (the exponential loss). Key differences between Gradient Boosting and AdaBoost.

XGBoost also adds a learning rate and column subsampling (randomly selecting a subset of features) to the gradient tree boosting algorithm, which further reduces overfitting. It is a very popular and in-demand algorithm, often referred to as the winning algorithm in competitions on various platforms. The different types of boosting algorithms are:
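
Column subsampling can be sketched as drawing a random subset of feature indices before each tree is grown. The `fraction` argument mirrors the idea behind XGBoost's `colsample_bytree` setting, but `sample_columns` is a hypothetical illustration, not XGBoost code; rounding down and keeping at least one feature are our own choices.

```python
import math
import random
from typing import List


def sample_columns(n_features: int, fraction: float, rng: random.Random) -> List[int]:
    """Pick the feature indices a single tree is allowed to split on."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    k = max(1, math.floor(fraction * n_features))  # keep at least one feature
    return sorted(rng.sample(range(n_features), k))


rng = random.Random(42)
for tree in range(3):
    # Each tree sees a different random half of the 10 features.
    print(tree, sample_columns(10, 0.5, rng))
```

Because each tree sees only part of the features, no single strong feature can dominate every tree, which is where the extra protection against overfitting comes from.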

1. Gradient Boosting

Difference between XGBoost and Gradient Boosting. Written by melson, Friday, March 18, 2022.

XGBoost is at once an algorithm, an open-source project, and a Python library. In gradient boosting, the regression tree added at each boosting iteration is fitted by least squares to the negative gradient of the loss.

The R package gbm uses gradient boosting by default. XGBoost delivers higher performance than standard Gradient Boosting. Gradient Boosting was developed as a generalization of AdaBoost by observing that what AdaBoost was doing was a gradient search in decision tree space against a specific loss function (the exponential loss).
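
The link between AdaBoost and gradient search can be checked numerically. Under the exponential loss `exp(-y * F)`, the negative gradient with respect to the score `F` is `y * exp(-y * F)`, so its magnitude `exp(-y * F)` equals the unnormalised sample weight AdaBoost has accumulated once earlier rounds have produced the score `F`. The two rounds below (predictions and alphas) are hypothetical values chosen only for the check.

```python
import math
from typing import List, Sequence


def exp_loss_neg_gradient(y: Sequence[int], scores: Sequence[float]) -> List[float]:
    """Negative gradient of exp(-y * F) with respect to F, for y in {-1, +1}."""
    return [yi * math.exp(-yi * f) for yi, f in zip(y, scores)]


def reweight(weights: Sequence[float], y: Sequence[int],
             preds: Sequence[int], alpha: float) -> List[float]:
    """AdaBoost-style multiplicative weight update (without renormalisation)."""
    return [w * math.exp(-alpha * yi * p) for w, yi, p in zip(weights, y, preds)]


y = [1, 1, -1, -1]
# Two hypothetical rounds: each weak learner's predictions and its alpha.
rounds = [([1, -1, -1, -1], 0.5), ([1, 1, 1, -1], 0.3)]

weights = [1.0] * len(y)
scores = [0.0] * len(y)
for preds, alpha in rounds:
    weights = reweight(weights, y, preds, alpha)
    scores = [f + alpha * p for f, p in zip(scores, preds)]

grad_magnitudes = [abs(g) for g in exp_loss_neg_gradient(y, scores)]
print(weights)
print(grad_magnitudes)  # equal to the weights up to floating-point rounding
```

Swapping the exponential loss for another differentiable loss, and fitting each new tree to that loss's negative gradient, is the generalization gradient boosting makes.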

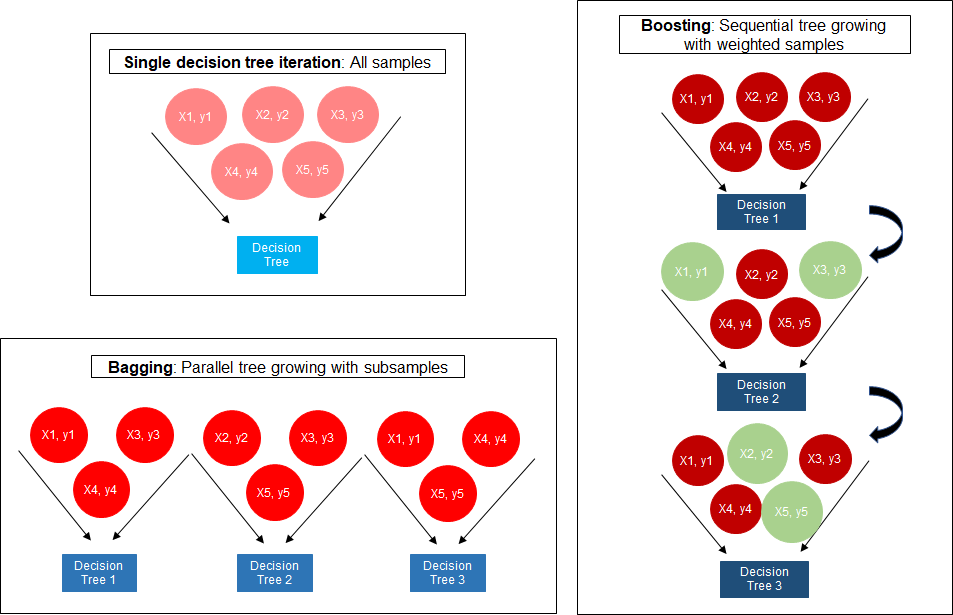

The general idea of boosting algorithms is to train predictors sequentially, with each subsequent model attempting to fix the errors of its predecessor. The important differences between gradient boosting and XGBoost are discussed below. XGBoost is an improved version of the Gradient Boosting algorithm.

XGBoost is an implementation of gradient boosting and works with decision trees, typically smaller ones. Extreme Gradient Boosting (XGBoost) is one of the most popular variants of gradient boosting. While you cannot find the useful L1 loss function in XGBoost, you can compare Yandex's implementation (CatBoost) with some of the custom loss functions written for XGBoost.
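
A custom objective for a second-order booster has to supply a gradient and a Hessian per sample. The exact L1 loss `|r|` has a zero second derivative almost everywhere, which leaves a Newton-style step with no curvature to use, so this sketch swaps in the pseudo-Huber loss `delta^2 * (sqrt(1 + (r / delta)^2) - 1)`. That loss behaves like L1 for large residuals and stays smooth near zero. The choice of pseudo-Huber and the function name are our assumptions, not taken from the source; wiring it into a specific library's objective hook is left out.

```python
import math
from typing import List, Sequence, Tuple


def pseudo_huber_grad_hess(preds: Sequence[float], targets: Sequence[float],
                           delta: float = 1.0) -> Tuple[List[float], List[float]]:
    """Per-sample gradient and Hessian of the pseudo-Huber loss.

    loss(r) = delta^2 * (sqrt(1 + (r / delta)^2) - 1), with r = pred - target.
    """
    grads, hessians = [], []
    for p, t in zip(preds, targets):
        r = p - t
        scale = 1.0 + (r / delta) ** 2
        grads.append(r / math.sqrt(scale))   # tends to delta * sign(r), like L1
        hessians.append(scale ** -1.5)       # stays positive, unlike exact L1
    return grads, hessians


g, h = pseudo_huber_grad_hess([0.0, 10.0, -10.0], [0.0, 0.0, 0.0])
print(g, h)
```

For large residuals the gradient saturates near plus or minus `delta`, so outliers pull on the model no harder than they would under L1.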

XGBoost was created by Tianqi Chen and initially maintained by the Distributed Deep Machine Learning Community (DMLC) group. In Gradient Boosting, the shortcomings of the existing weak learners are identified by gradients. In our blog post on decision tree vs. random forest, before going into the reasons for or against either algorithm, we briefly examine the core idea of both.

Boosting is a method of converting a set of weak learners into a strong learner. Both AdaBoost and Gradient Boosting are boosting algorithms, which means that they convert a set of weak learners into a single strong learner. If XGBoost's regularization parameter is set to 0, then there is no difference between the prediction results of gradient boosted trees and XGBoost.

2. Gradient Boosted Trees

XGBoost, or eXtreme Gradient Boosting, is one of the best-known gradient boosting ensemble techniques, offering enhanced performance and speed among tree-based sequential learning algorithms. Its use of second-order derivatives is also known as Newton boosting.

Gradient boosting is similar to Adaptive Boosting (AdaBoost) but differs from it in certain aspects. Gradient boosting can be more difficult to train but can achieve lower model bias than Random Forest (RF). In addition, Chen and Guestrin introduce shrinkage, i.e., scaling the contribution of each newly added tree by a learning rate.
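
A toy calculation shows what shrinkage does. Assume, purely for illustration, that every new tree fits the current residual perfectly. Scaling each tree's contribution by a learning rate `eta` then leaves a residual of `(1 - eta) ** m` times the original after `m` rounds, so a smaller `eta` means more, smaller steps. The function name is hypothetical.

```python
def residual_after(target: float, eta: float, rounds: int) -> float:
    """Residual left when each round adds eta times a perfect fit of the residual."""
    prediction = 0.0
    for _ in range(rounds):
        residual = target - prediction
        prediction += eta * residual  # shrunken contribution of the new tree
    return target - prediction


for eta in (1.0, 0.3, 0.1):
    print(eta, residual_after(10.0, eta, rounds=5))
```

With `eta = 1` the toy model matches the target in a single round. On real, noisy data that same eagerness is what lets boosting overfit, which is why a small learning rate is typically paired with more trees.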

3. Extreme Gradient Boosting

XGBoost uses advanced regularization (L1 and L2), which improves the model's ability to generalize. With Gradient Boosting, any differentiable loss function can be used.
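
For a fixed tree structure, the XGBoost paper gives the optimal weight of a leaf as `w = -G / (H + lambda)`, where `G` and `H` are the sums of the first- and second-order gradients of the samples in that leaf and `lambda` is the L2 penalty. The sketch below also applies an L1 penalty `alpha` by soft-thresholding `G`. That is our reading of how an L1 term enters the closed form, so treat the function as an illustration rather than XGBoost's code.

```python
def leaf_weight(grad_sum: float, hess_sum: float,
                reg_lambda: float = 1.0, reg_alpha: float = 0.0) -> float:
    """Regularised Newton step for one leaf: -soft_threshold(G, alpha) / (H + lambda)."""
    if abs(grad_sum) <= reg_alpha:
        return 0.0  # the L1 penalty zeroes out leaves with a small total gradient
    shrunk = grad_sum - reg_alpha if grad_sum > 0 else grad_sum + reg_alpha
    return -shrunk / (hess_sum + reg_lambda)


G, H = -4.0, 2.0
print(leaf_weight(G, H, reg_lambda=0.0))                 # 2.0, plain Newton step -G / H
print(leaf_weight(G, H, reg_lambda=2.0))                 # 1.0, L2 shrinks the weight
print(leaf_weight(G, H, reg_lambda=0.0, reg_alpha=5.0))  # 0.0, L1 zeroes it out
```

With both penalties at 0, the formula reduces to the unregularized Newton step `-G / H`.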

Each tree is trained to correct the residuals of the previously trained trees. Both algorithms initialize a strong learner (usually a decision tree) and iteratively create weak learners that are added to it. XGBoost is designed to enhance the performance and speed of a machine learning model.
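
Training each tree on the residuals of the previous ones can be sketched in plain Python for squared loss on one numeric feature, with depth-1 trees (stumps) as the weak learners. This is a minimal sketch under those assumptions; the names `fit_stump`, `gradient_boost` and `predict` and the toy data are hypothetical, not a library API.

```python
from typing import List, Sequence, Tuple

# A regression stump: (threshold, value if x <= threshold, value otherwise).
Stump = Tuple[float, float, float]


def fit_stump(xs: Sequence[float], targets: Sequence[float]) -> Stump:
    """Least-squares regression stump on a single numeric feature."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((t - lv) ** 2 for t in left) + sum((t - rv) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, lv, rv)
    if best is None:  # every x is identical: fall back to a constant
        mean = sum(targets) / len(targets)
        return (float("inf"), mean, mean)
    return (best[1], best[2], best[3])


def predict_stump(stump: Stump, x: float) -> float:
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


Model = Tuple[float, float, List[Stump]]  # (base value, learning rate, stumps)


def gradient_boost(xs: Sequence[float], ys: Sequence[float],
                   n_rounds: int = 50, learning_rate: float = 0.1) -> Model:
    """Squared-loss gradient boosting where each stump fits the current residuals."""
    base = sum(ys) / len(ys)  # start from the mean of the targets
    preds = [base] * len(ys)
    stumps: List[Stump] = []
    for _ in range(n_rounds):
        # For the loss (y - F)^2 / 2 the negative gradient is the residual y - F.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def predict(model: Model, x: float) -> float:
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
model = gradient_boost(xs, ys, n_rounds=100, learning_rate=0.1)
print(round(predict(model, 1), 3), round(predict(model, 6), 3))  # prints 1.0 5.0
```

Each stump only has to explain what the ensemble so far still gets wrong, which is the "fix the errors of the predecessor" idea in its simplest form.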

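Gradient boosting is made up of three elements: a loss function to be optimized, a weak learner to make predictions, and an additive model that adds weak learners to minimize the loss. Generally, XGBoost is faster than gradient boosting, but gradient boosting has a wide range of applications. Like Random Forests, Gradient Boosting is an ensemble learner.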

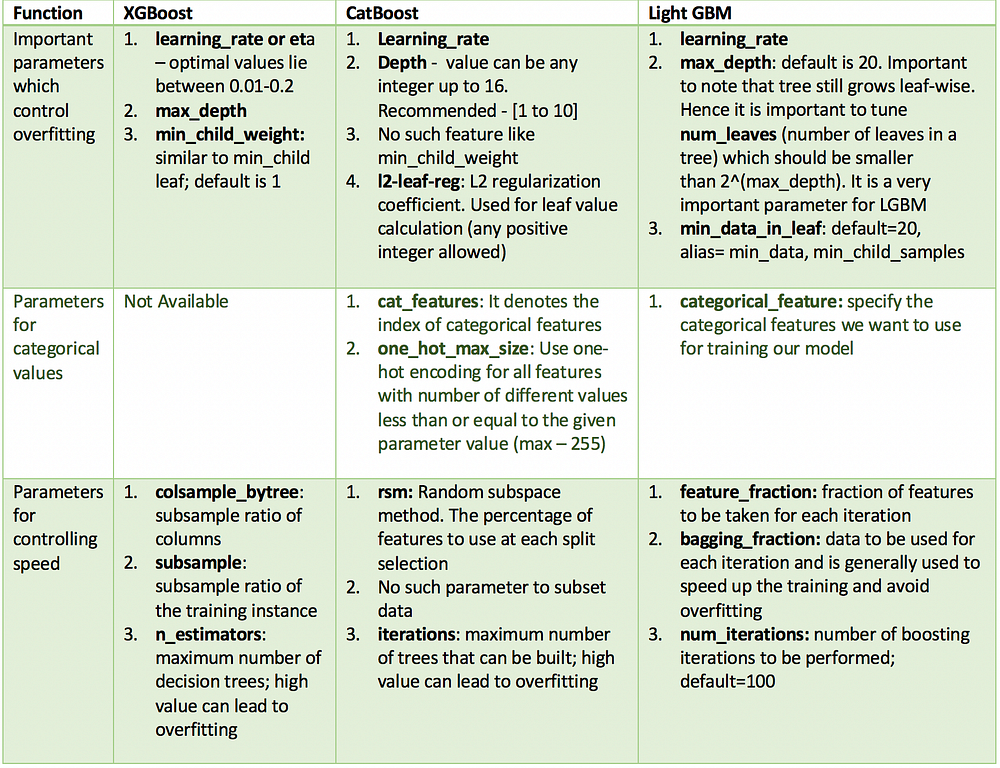

Besides, CatBoost works excellently with categorical features, while XGBoost only accepts numeric inputs. XGBoost stands for Extreme Gradient Boosting.
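
Since XGBoost only accepts numeric inputs, categorical columns have to be encoded first. One common option (not the only one) is one-hot encoding, sketched here for a single column; the function name and sample values are hypothetical.

```python
from typing import List, Sequence, Tuple


def one_hot(values: Sequence[str]) -> Tuple[List[str], List[List[int]]]:
    """Encode one categorical column as 0/1 indicator columns."""
    categories = sorted(set(values))          # fixed, reproducible column order
    index = {c: i for i, c in enumerate(categories)}
    rows = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1
        rows.append(row)
    return categories, rows


cols, encoded = one_hot(["red", "green", "red", "blue"])
print(cols)     # ['blue', 'green', 'red']
print(encoded)  # [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]]
```

Categories that first appear at prediction time would need separate handling, which this sketch omits.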

For noisy data, bagging is likely to be most promising. GBM uses only the first-order derivative of the loss function at the current boosting iteration, while XGBoost uses both the first- and second-order derivatives. The Gradient Boosting algorithm is more robust to outliers than AdaBoost.
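
The first-order versus second-order contrast can be made concrete for one leaf under the log loss. The sketch compares the mean negative gradient (what a least-squares leaf fitted to the pseudo-residuals outputs) with the Newton step `-sum(g) / sum(h)` that a second-order method uses, with regularization set to 0. Some GBM implementations refine leaf values with an additional line search, so the first-order number describes the basic scheme rather than a particular library. The function name and sample margins are hypothetical.

```python
import math
from typing import Sequence, Tuple


def leaf_values(margins: Sequence[float], labels: Sequence[int]) -> Tuple[float, float]:
    """Compare a first-order and a second-order (Newton) leaf value under log loss.

    margins are current raw scores and labels are 0/1, for the samples in one leaf.
    """
    probs = [1.0 / (1.0 + math.exp(-m)) for m in margins]
    grads = [p - y for p, y in zip(probs, labels)]
    hessians = [p * (1.0 - p) for p in probs]
    # First order: mean negative gradient, the least-squares fit to the pseudo-residuals.
    first_order = -sum(grads) / len(grads)
    # Second order: Newton step that divides by the curvature (no regularization).
    newton = -sum(grads) / sum(hessians)
    return first_order, newton


# A leaf of three positive samples the model is already fairly confident about.
print(leaf_values([2.0, 2.5, 3.0], [1, 1, 1]))
```

The Newton value comes out more than ten times larger here: the gradients are small because the model is already confident, but the curvature is small too, and dividing by it rescales the step into log-odds units.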

AdaBoost can improve the performance of a wide range of machine learning algorithms used as its weak learners.

